An Update On Facebook Censorship of Nudity, Naked Art and More

In 2014, I published my first article on why Facebook censorship is such a big issue. In the 4 years since, a few things have changed, and we've gained a lot more insight into how Facebook (and Instagram, which it owns) moderates the vast amount of content posted to its platforms every day.

Toward the end of April 2018, Facebook updated its Community Standards and released the internal guidelines that govern its content control. Facebook's moderator guidelines were leaked to the public a few years ago, though the company has otherwise tried to keep them under wraps. Now it has opted for more transparency about what content is prohibited and why.

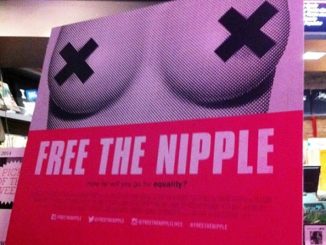

In their new Community Standards, Facebook says that their nudity rules have “become more nuanced over time.” They now supposedly make more exceptions for nudity, such as when it's used for educational or medical purposes, or to raise awareness for a cause or protest. Specifically, they note that images of female nipples are now permitted when they depict “acts of protest.” Does this mean I can post a topfree photo of myself if I am protesting Facebook’s sexist and discriminatory policies? My guess is probably not. I simply don’t understand why women have to be protesting, breastfeeding or dealing with cancer in order to share images that show their breasts or nipples.

Another significant change to the network’s anti-nudity policies is that it now claims to allow childbirth imagery. In December 2017, activist Katie Vigos of the Empowered Birth Project launched a change.org petition directed at Instagram to “allow uncensored birth images.” A month later, Facebook contacted Vigos to tell her they’d be lifting the ban on birth content. This month, the new policy went into effect on both platforms, and the petition declared victory with over 23,000 signatures. (However, company reps have also said they’d been reconsidering this policy for a few years, so presumably the petition simply pushed them to finally act.)

This article in Harper’s Bazaar tells the story of the petition through interviews with Katie, birth activists and photographers, and senior staff at Instagram. It was the first time I’ve seen FB admit to using an automated bot to filter nudity and porn. Instagram’s Head of Public Policy explains that the bot identifies visible skin and that they use it to quickly remove porn, though they acknowledge it is a very limited tool. That may explain why some of the photos shown in the article were banned even though they don’t show any forbidden body parts. Or then again, it may be because Facebook still employs thousands of underpaid and undertrained subcontractors in other countries, who spend a few seconds reviewing each piece of content amid thousands of posts per day.

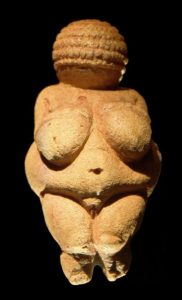

This brings us to the fact that on the censorship front, not much has really changed at Facebook. The “Community Standards,” though lengthier and more detailed, are still applied so inconsistently that they become meaningless. Nude paintings and statues, which are supposed to be permitted, are still routinely taken down and censored. Showing even a hint of female nipple can get your entire page banned, and attempts to self-censor one’s nude art are often futile. The lawsuit in France that challenged the company’s policy on nude art didn’t exactly pan out either (the plaintiff plans to appeal). So here we are with more lenient content policies, which only create more opportunities for FB to ignore its own guidelines and continue its rampant censorship.

In their April newsroom update, Facebook says it has increased its content reviewer workforce by 40% since last year and now has over 7,500 subcontractors. The company acknowledges that it makes mistakes in content moderation, sometimes because its guidelines are insufficient and sometimes because its “processes involve people, and people are fallible.” Problem is, these workers collectively review 100 million items each month. Even if they get it right 99.9% of the time, that still adds up to 100,000 mistakes a month, or more than a MILLION a year.
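For a rough sense of scale, here is the arithmetic. It simply assumes the 100 million monthly items and the 99.9% accuracy rate mentioned above; it is not based on any error rate Facebook has actually published:

$$
100{,}000{,}000 \times (1 - 0.999) = 100{,}000 \text{ mistakes per month}, \qquad 100{,}000 \times 12 = 1{,}200{,}000 \text{ mistakes per year}
$$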

The social media giant is now looking to mitigate this problem with a new appeals process (part of the big April update). Previously, you could only appeal if your profile or page was banned outright. Now you can also appeal when individual posts are taken down. A successful appeal would mean not just getting the post restored but also avoiding a possible Facebook jail sentence (as users like to call it) of 24 hours to 30 days, during which you can’t post, comment, respond to messages or do anything important. In the future, users will also be able to appeal decisions on flagged posts that weren’t removed.

But I don’t think we should get our hopes up for this appeal process to fix the “mistakes” or restore “good” accounts. Facebook gave little information on how it works except to say that an appeal would be reviewed by their “team” within 24 hours. As this NPR article points out, “…it remains unclear what relationship the team has with Facebook and with its first-line reviewers. If appeals are reviewed under the same conditions that initial content decisions are made, the process may be nothing more than an empty gesture.”

I had a chance to test out the new appeal option myself this week. I posted a link to a Mel Magazine article about nude beaches that featured me and other naturists. It was flagged / reported for nudity because of the image below. I was given the option to request a review and was then told they’d get back to me with a decision in “48 hours” (not 24). It’s now been over 4 days, and I still haven’t heard anything about my appeal. In the meantime, my post has been made “invisible” until a judgment is made. So I don’t think this new feature is working too well from the get-go!

In 2016, NPR published a fascinating investigation into just how subjective content removal decisions are on the network, and thus how inconsistent the censorship truly is. They tested the hate speech guidelines by flagging 200 different posts and seeing what got taken down and what didn’t. Then they asked a company spokesperson for an explanation. To get a user perspective, they also asked two journalists to weigh in on what they thought should or shouldn’t be removed. What they uncovered were very unpredictable moderators and many “errors” in how Facebook acted on these posts. (For example, they’d find that a flagged post had been removed, only for the spokesperson to say it shouldn’t have been, and vice versa for other content.)

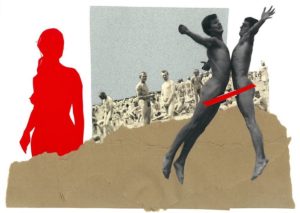

As one would expect, some content removal is straightforward (a straight-up dick pic, for example, clearly violates the guidelines), but as this article reveals, many other decisions are not so easy. They require some thought and a look at the context. As it turns out, subcontractors often can’t even see the full context of a post for privacy or technical reasons. Or, as with the photo of the “Napalm Girl” that Facebook was slammed for removing in 2016, they can see the full image but may know nothing about its historical significance. They glance at it, see child nudity, which isn’t allowed, and remove it.

The workers are also evaluated on speed and told to work fast, leaving no time for this kind of complex decision-making. NPR calculated that the average worker may be looking at upwards of 2,880 posts per day, and called the whole system “the biggest editing — aka censorship — operation in the history of media.”
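To put NPR’s figure in perspective, assume an 8-hour shift with no breaks (that shift length is my assumption, not NPR’s):

$$
\frac{8 \text{ h} \times 3{,}600 \text{ s/h}}{2{,}880 \text{ posts}} = \frac{28{,}800 \text{ s}}{2{,}880 \text{ posts}} = 10 \text{ seconds per post}
$$

That’s roughly ten seconds to make a call that may hinge on context the reviewer can’t even see.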

This all leads us to conclude what we already know from experience – the Facebook censorship system is, and has always been, broken.

In a manifesto posted in February 2017, Mark Zuckerberg describes a possible new system in which users have settings to control what they see in their feed. They could, in theory, check a box to filter out nudity, profanity or violence, for example. This sounds like what I have always advocated as the solution: Facebook should not be deciding what its 2 billion users can and cannot see. It should instead give users more autonomy and control over their own experience.

BUT Zuckerberg also said that the default settings “will be whatever the majority of people in your region selected” and that Facebook will still block content based on “standards and local laws.” In response, many have pointed out the contradiction between capitulating to other countries’ oppressive censorship laws and Facebook’s goal to “make the world more open and connected.” Not to mention the fact that Facebook wants to be more of a news / media operation instead of just a social media platform.

Another disturbing part of the plan outlined in the Zuckerberg manifesto is Facebook’s push to develop artificial intelligence to do the work of moderation. Because robots can better understand nuance and context?

In the past few months, Facebook has had bigger problems on its plate than content moderation. Over 80 million of its users found out that their personal profile data had been harvested by a company called Cambridge Analytica. The scandal has underscored the fact that Facebook views users as assets and “products” rather than customers. This privacy breach has undoubtedly inspired more people to leave the social network, but… not enough to make a real difference or cause a significant shakeup.

Will Facebook ever become obsolete? Or will it continue to prosper as a social network, a “news site” and more recently, an online dating platform?

Time will tell, but for now, I wrote up a list of alternative social networks to Facebook. These online social networks allow a lot more freedom of expression and aren’t looking to steal, sell or market your personal information. If you like these sites, then share them and perhaps we can really lead a mass exodus from the evil empire of social media.